The proposed Urban Search and Rescue (USAR) robot is designed to locate survivors trapped in the debris left by a disaster, natural or human-inflicted, rescue them and bring them to safety. In a nutshell, the robot is remotely controlled as it traverses the affected areas. It scans for survivors, gives the controller real-time visual coverage of the site, and detects and reports abnormal temperatures. Once it locates a survivor, it rescues, secures and carries that person to safety.

The robot carries out rescue operations by imitating the controller's gestures, i.e. movements of the arms and torso. These gestures are tracked by a Microsoft Kinect in a control room located at a safe distance from the disaster site. Imitating body gestures is intended to make rescue operations more efficient, and it distinguishes this design from other USAR robots.

The design can be broadly classified into three blocks:

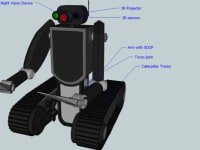

The Robot:

The robot can perform most functions of the human upper body. It has two arms, each with five degrees of freedom (5DOF) and fitted with temperature sensors. Force sensors mounted on the arms regulate the force each arm applies. The wrists are limited to a heaving motion and carry IR imaging cameras, which help assess the orientation of a victim among the debris with better perspective and precision. The torso consists of two parts, with the lower portion movable through a joint, so the robot can adopt a better posture for taking a victim into its arms. Caterpillar tracks provide stable, all-terrain locomotion.

The joints are driven by high-torque servo motors. Arduino Mega 2560 microcontrollers control these servos with PWM signals, and the pulse width of each signal sets the rotation angle required of its joint. The pulse widths are calculated from joint-position data acquired at the controller side and sent to the Arduino over a serial link. The eyes of the humanoid are Forward Looking IR (FLIR) cameras for thermal imaging. Night-Vision Devices (NVDs) are aligned closely with the FLIR cameras so that the two images almost overlap.
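The entry does not give the servo timing it uses. As a minimal sketch, assume a generic hobby servo that maps 0 to 180° linearly onto 1000 to 2000 µs pulses in a 20 ms (50 Hz) frame. Under that assumption, the pulse width for a commanded joint angle could be computed as shown below. The constants and function names are hypothetical. Real values would come from each servo's datasheet or from calibration.

```python
# Hypothetical calibration values (assumption): a generic hobby servo that
# maps 0-180 degrees linearly onto 1000-2000 microsecond pulses at 50 Hz.
PULSE_MIN_US = 1000.0
PULSE_MAX_US = 2000.0
ANGLE_MIN_DEG = 0.0
ANGLE_MAX_DEG = 180.0
PERIOD_US = 20_000.0  # one 50 Hz servo frame = 20 ms


def angle_to_pulse_width(angle_deg: float) -> float:
    """Map a commanded joint angle (degrees) to a PWM pulse width (microseconds)."""
    # Clamp so a noisy or out-of-range command cannot overdrive the joint.
    angle = max(ANGLE_MIN_DEG, min(ANGLE_MAX_DEG, angle_deg))
    span = (angle - ANGLE_MIN_DEG) / (ANGLE_MAX_DEG - ANGLE_MIN_DEG)
    return PULSE_MIN_US + span * (PULSE_MAX_US - PULSE_MIN_US)


def duty_cycle(pulse_us: float) -> float:
    """Fraction of the 20 ms frame during which the PWM signal is high."""
    return pulse_us / PERIOD_US
```

For example, `angle_to_pulse_width(90)` gives 1500.0 µs, which corresponds to a duty cycle of 0.075 (7.5 %). Clamping the angle keeps a bad command from driving a joint past its range.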

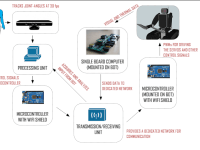

The Controller:

On the controller side, the gesture data sent to the humanoid is derived from joint positions captured by Microsoft Kinect, a peripheral originally designed for gaming. The device tracks 20 skeletal joints. Nine of these are used to drive the corresponding limb movements of the robot. A second controller uses a conventional joystick to operate the torso joint and the tracked locomotion of the robot.
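The Kinect reports each tracked joint as a 3-D point. One way to turn joint positions into a servo angle is to measure the angle between the two limb segments that meet at a joint, using the dot product. The sketch below assumes that approach. The entry does not say how its angles are derived, and the function name is hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def joint_angle(parent: Vec3, joint: Vec3, child: Vec3) -> float:
    """Angle in degrees at `joint` between the segments to `parent` and `child`.

    For an elbow: parent = shoulder, joint = elbow, child = wrist.
    """
    # Vectors from the joint out along each limb segment.
    a = tuple(p - j for p, j in zip(parent, joint))
    b = tuple(c - j for c, j in zip(child, joint))
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        raise ValueError("coincident joint positions")
    # Clamp to [-1, 1] so floating-point drift cannot push acos out of domain.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))
```

For example, suppose the shoulder is at (0, 0, 0), the elbow at (0.3, 0, 0) and the wrist at (0.3, 0.3, 0). The function then returns approximately 90°, a right-angled elbow. A fully straightened arm gives approximately 180°.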

Interaction Module:

The module provides a dedicated wireless network through a WiFi-enabled single-board computer. The network sends the information acquired at the controller side to the microcontroller, and it returns visual and thermal data from the robot to the controller side.
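The entry does not describe the message format used on the wireless and serial link. A hypothetical minimal frame could carry one byte per joint angle between a start marker and a checksum. The receiving microcontroller could then detect corrupted or misaligned data. The byte layout, the 0xFF marker and the 7-bit checksum below are all assumptions for illustration.

```python
from typing import List, Sequence

START_BYTE = 0xFF  # hypothetical frame marker; never a valid angle (0-180)


def encode_frame(angles_deg: Sequence[float]) -> bytes:
    """Pack joint angles into [START, a0, a1, ..., checksum] (assumed layout)."""
    # Round to whole degrees and clamp into the 0-180 range of one byte.
    body = [max(0, min(180, round(a))) for a in angles_deg]
    checksum = sum(body) & 0x7F  # 7-bit checksum, always below START_BYTE
    return bytes([START_BYTE, *body, checksum])


def decode_frame(frame: bytes, n_joints: int) -> List[int]:
    """Validate a received frame and return the joint angles, or raise ValueError."""
    if len(frame) != n_joints + 2 or frame[0] != START_BYTE:
        raise ValueError("malformed frame")
    body = list(frame[1:-1])
    if (sum(body) & 0x7F) != frame[-1]:
        raise ValueError("checksum mismatch")
    return body
```

Valid angles never exceed 180, and the checksum is masked to 7 bits. As a result, no byte other than the marker can equal 0xFF, so a receiver can resynchronize by scanning for the start byte.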

The gesture-based design gives the robot greater flexibility and dexterity, and it attempts to bridge the gap between humans and robots. Its human-like upper body is intended to approach the efficiency of a trained rescuer. Its all-terrain access on tracks makes it suitable for arduous tasks. The design has great market potential, because such robots are not currently available on the market. However, commercializing the product after a successful prototype will require extensive support from industry and government.

About the Entrant

- Name: Anandkumar Mahadevan

- Type of entry: team

- Team members:
  - Rajesh Kannan Megalingam, Assistant Professor, Amritapuri Campus, Amrita Vishwa Vidyapeetham University
  - Anandkumar M, Third Year B-Tech Student, Amritapuri Campus, Amrita Vishwa Vidyapeetham University
  - Ashis Pavan K, Third Year B-Tech Student, Amritapuri Campus, Amrita Vishwa Vidyapeetham University

- Software used for this entry: SmartDraw, Google SketchUp

- Patent status: none