Cameras are key enablers of self-driving and advanced driver assistance systems (ADAS), which are revolutionizing the safety, comfort, and efficiency of transportation. However, they suffer from a fundamental flaw: they capture images at fixed intervals (typically 40 to 45 frames per second, FPS), and between consecutive images they are effectively “blind”. This blind time is extended further in poor lighting and high-speed scenarios, where reliable decisions require several consecutive images. As a result, potential hazards such as fast-moving pedestrians, vehicles, or debris can be missed, reducing safety. Reducing the blind time requires increasing camera framerates, but this strains data collection and processing: self-driving cars already collect upwards of 11 terabytes per hour, a figure projected to reach 40 terabytes per hour in the coming years.
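
The blind time and data volumes above follow from simple arithmetic. The Python sketch below computes the interval between frames at a given framerate, how far an object travels in that interval, and the sustained data rate implied by a volume in terabytes per hour. The function names and the 100 km/h speed are illustrative assumptions, not figures from DAGR.

```python
# Blind-time and bandwidth arithmetic for a frame-based camera.
# The 100 km/h speed below is an illustrative assumption, not a figure from the entry.

def blind_time_s(fps: float) -> float:
    """Interval between two consecutive frames, in seconds."""
    return 1.0 / fps


def distance_in_blind_time_m(fps: float, speed_kmh: float) -> float:
    """Distance in metres that an object moving at speed_kmh covers between two frames."""
    speed_m_per_s = speed_kmh / 3.6  # km/h -> m/s
    return speed_m_per_s * blind_time_s(fps)


def tb_per_hour_to_gb_per_s(tb_per_hour: float) -> float:
    """Convert a data volume in TB/h to a sustained rate in GB/s (decimal units)."""
    return tb_per_hour * 1000.0 / 3600.0


if __name__ == "__main__":
    SPEED_KMH = 100.0  # assumed speed, for illustration only
    for fps in (20, 45, 5000):
        print(f"{fps:>5} FPS: blind time {blind_time_s(fps) * 1e3:6.2f} ms, "
              f"object travels {distance_in_blind_time_m(fps, SPEED_KMH):.3f} m")
    for tb in (11, 40):
        print(f"{tb} TB/h is about {tb_per_hour_to_gb_per_s(tb):.2f} GB/s")
```

At 45 FPS the blind time is about 22 ms, during which an object at 100 km/h moves roughly 0.6 m; at 20 FPS the figures are 50 ms and about 1.4 m. The 11 TB/h quoted above corresponds to roughly 3 GB/s of sustained data.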

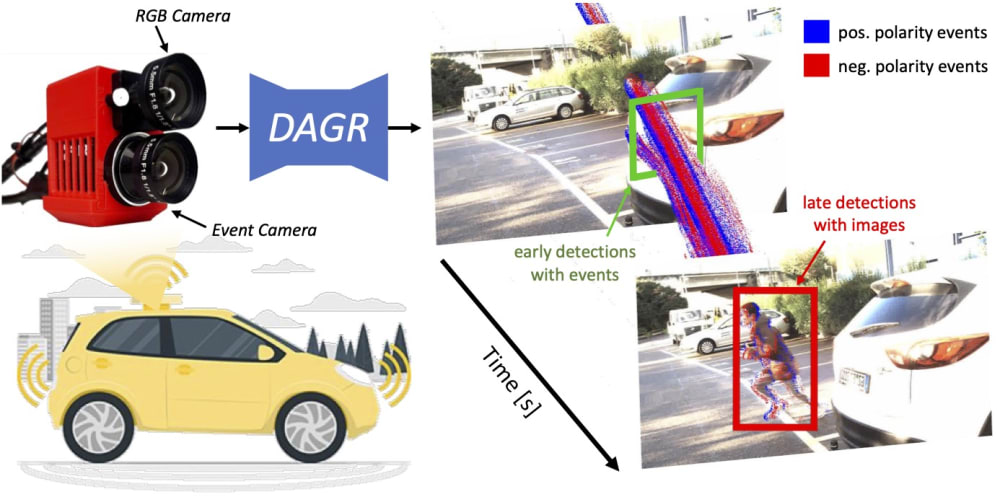

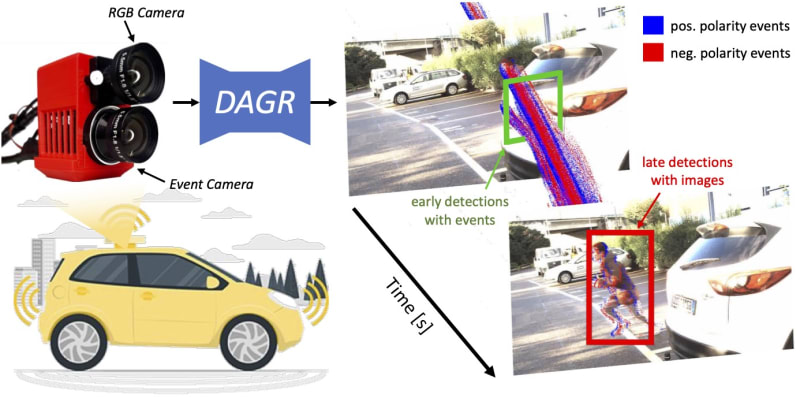

Our invention, DAGR, published in Nature, addresses this challenge by combining a traditional low-framerate camera (20 FPS) with a bio-inspired event camera, enabling high-speed detection of traffic participants with the latency of a 5,000 FPS camera but 100 times less data. Event cameras, inspired by the human retina, have independent, motion-sensitive pixels that transmit only sparse, asynchronous data called events. These events efficiently capture high-speed motion in the blind time between images, with microsecond latency, low motion blur, and high dynamic range. DAGR therefore improves detection especially during high-speed motion and in challenging lighting conditions, such as at night, when exiting a tunnel, or at sunset, where traditional cameras suffer from saturation or blur.
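
To illustrate how events fill the gap between frames, the Python sketch below represents events as (x, y, timestamp, polarity) tuples, selects those falling between two 20 FPS frames, and measures the longest interval without new data. The `Event` layout, the helper names, and the synthetic event stream are assumptions made for illustration; they are not DAGR's actual data format or code.

```python
from bisect import bisect_right
from typing import List, NamedTuple


class Event(NamedTuple):
    """One event-camera sample (an assumed x, y, t, polarity layout)."""
    x: int         # pixel column
    y: int         # pixel row
    t_us: int      # timestamp in microseconds
    polarity: int  # +1 for a brightness increase, -1 for a decrease


FRAME_PERIOD_US = 50_000  # 20 FPS image camera -> one frame every 50 ms


def events_in_blind_time(events: List[Event], t_start_us: int, t_end_us: int) -> List[Event]:
    """Return events with t_start_us < t_us <= t_end_us; `events` must be sorted by t_us."""
    times = [e.t_us for e in events]
    lo = bisect_right(times, t_start_us)
    hi = bisect_right(times, t_end_us)
    return events[lo:hi]


def max_gap_us(update_times_us: List[int]) -> int:
    """Longest interval without new sensor data (needs at least two timestamps)."""
    ts = sorted(update_times_us)
    return max(b - a for a, b in zip(ts, ts[1:]))


if __name__ == "__main__":
    # Synthetic stream: an edge moving right by one pixel every 5 ms.
    events = [Event(x=100 + i, y=50, t_us=1_000 + 5_000 * i, polarity=1) for i in range(12)]
    between = events_in_blind_time(events, 0, FRAME_PERIOD_US)
    frame_times = [0, FRAME_PERIOD_US]
    print(len(between), "events between the two frames")
    print("frames only:", max_gap_us(frame_times), "us")
    print("frames + events:", max_gap_us(frame_times + [e.t_us for e in between]), "us")
```

With this synthetic stream, 10 of the 12 events fall between the two frames, and the longest stretch without new data drops from 50,000 µs (frames only) to 5,000 µs. A real event camera produces events at much finer, scene-dependent intervals; the regular 5 ms spacing here only keeps the numbers easy to check.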

DAGR detects traffic participants in two stages: it first extracts preliminary detections from images using a Convolutional Neural Network (CNN), and then efficiently updates these detections with low-latency events using an Asynchronous Graph Neural Network (AGNN). The key to DAGR’s efficiency lies in how it processes events: as sparse, spatio-temporally evolving data structures that, unlike previous ones, are non-redundant and can be updated efficiently as new events arrive. This cuts computation by a factor of ~4,800 compared to previous algorithms, translating into savings in energy and computation time.
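
The idea of an incrementally updatable event graph can be sketched in a few lines of Python. In this toy version, each event becomes a node connected to earlier events within a spatial radius and a time window (found with a spatial hash grid), and only the new node's feature is computed when it arrives, instead of reprocessing all events. The class `AsyncEventGraph`, its parameters `radius_px` and `window_us`, and the simple averaging update rule are hypothetical stand-ins for the learned AGNN described above, and the CNN image stage is omitted.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple


class AsyncEventGraph:
    """Toy incremental event graph (hypothetical; not DAGR's actual AGNN).

    Each event becomes a node linked to earlier events within `radius_px` pixels
    and `window_us` microseconds. Only the new node's feature is computed on
    arrival, so the work per event depends on its local neighbourhood, not on
    the total number of events. Events must arrive in timestamp order.
    """

    def __init__(self, radius_px: float = 3.0, window_us: int = 10_000):
        self.radius_px = radius_px
        self.window_us = window_us
        self.cell = max(1, math.ceil(radius_px))  # grid cell size >= radius
        self.nodes: List[Tuple[int, int, int, int]] = []  # (x, y, t_us, polarity)
        self.features: List[float] = []
        self.edges: Dict[int, List[int]] = {}  # node id -> ids of earlier neighbours
        self.grid: Dict[Tuple[int, int], List[int]] = defaultdict(list)  # spatial hash
        self.feature_updates = 0  # number of node features computed so far

    def insert(self, x: int, y: int, t_us: int, polarity: int) -> int:
        """Add one event as a node and compute only its feature; return its id."""
        cx, cy = x // self.cell, y // self.cell
        neighbours = []
        # Any node within radius_px lies in this cell or one of its 8 neighbours.
        for dx in (-1, 0, 1):
            for dy in (-1, 0, 1):
                for j in self.grid.get((cx + dx, cy + dy), ()):
                    xj, yj, tj, _ = self.nodes[j]
                    if (t_us - tj <= self.window_us
                            and math.hypot(x - xj, y - yj) <= self.radius_px):
                        neighbours.append(j)
        node_id = len(self.nodes)
        self.nodes.append((x, y, t_us, polarity))
        self.edges[node_id] = neighbours
        # One message-passing step: own polarity plus half the mean neighbour feature.
        msg = (sum(self.features[j] for j in neighbours) / len(neighbours)
               if neighbours else 0.0)
        self.features.append(polarity + 0.5 * msg)
        self.feature_updates += 1
        self.grid[(cx, cy)].append(node_id)
        return node_id


def dense_recompute_cost(num_events: int) -> int:
    """Feature computations if all nodes were recomputed after every new event."""
    return num_events * (num_events + 1) // 2


if __name__ == "__main__":
    g = AsyncEventGraph(radius_px=3.0, window_us=10_000)
    for i in range(100):  # synthetic edge sweeping along a row, one event per 100 us
        g.insert(x=i, y=0, t_us=100 * i, polarity=1)
    print("incremental feature updates:", g.feature_updates)
    print("dense recomputation would need:", dense_recompute_cost(100))
```

For 100 synthetic events the toy graph computes 100 node features, whereas recomputing every node after each event would take 5,050, and that gap grows quadratically with the number of events. The actual AGNN in DAGR is a learned neural network and considerably more involved; these counts illustrate the principle of incremental updates only and are unrelated to the ~4,800x reduction cited above.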

DAGR performs on par with existing camera systems while cutting compute, data, and reaction times for downstream decision-making. It can thus improve the safety and reduce the data requirements of existing self-driving and ADAS systems. The sensors in DAGR are commercially available and based on mature CMOS technology. Moreover, our prototype algorithm is compatible with existing neuromorphic hardware tailored to sparse computation, such as Intel’s Loihi or the Kraken, which has been successfully deployed on nano-drones. Finally, with growing automation in transportation, the market potential of DAGR is clear: enhanced safety, efficiency, and reduced system complexity position our innovation favorably for automotive manufacturers, suppliers, and technology integrators.

Our invention represents a substantial innovation in automotive safety and efficiency and beyond, promising to transform perception paradigms. In particular, DAGR can also deliver value in other domains, such as high-speed automation, sorting, or monitoring, and in embedded always-on devices where power, data, and speed are of paramount importance.

For more details, check out our Nature article here: https://www.nature.com/articles/s41586-024-07409-w.


About the Entrant

- Name: Daniel Gehrig

- Type of entry: team

- Team members: Davide Scaramuzza

- Software used for this entry: Yes, a software implementation is available here: https://github.com/uzh-rpg/dagr

- Patent status: none