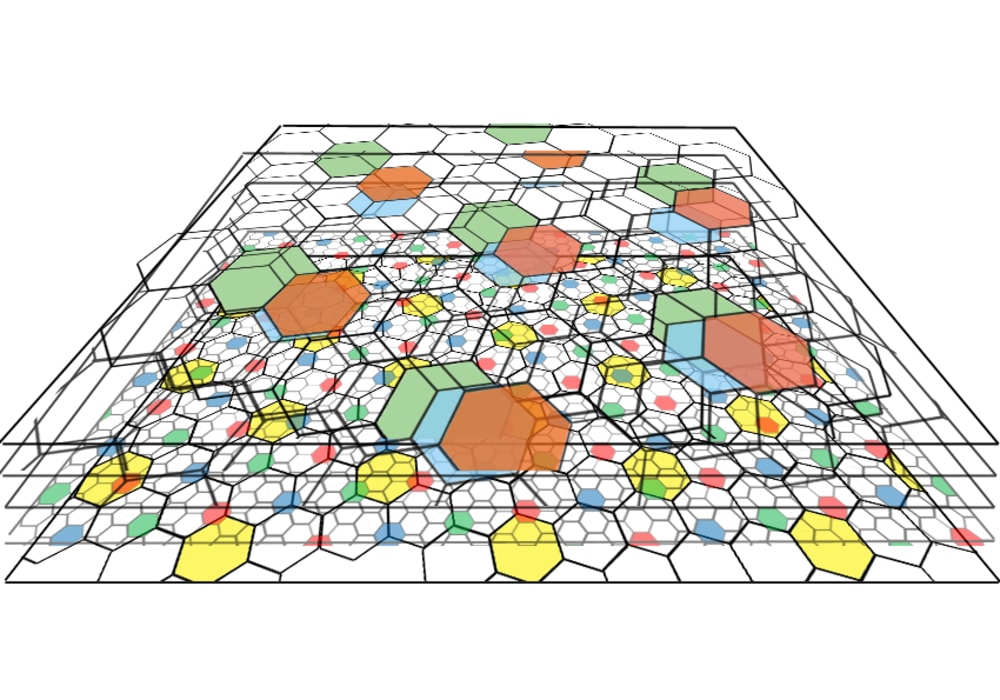

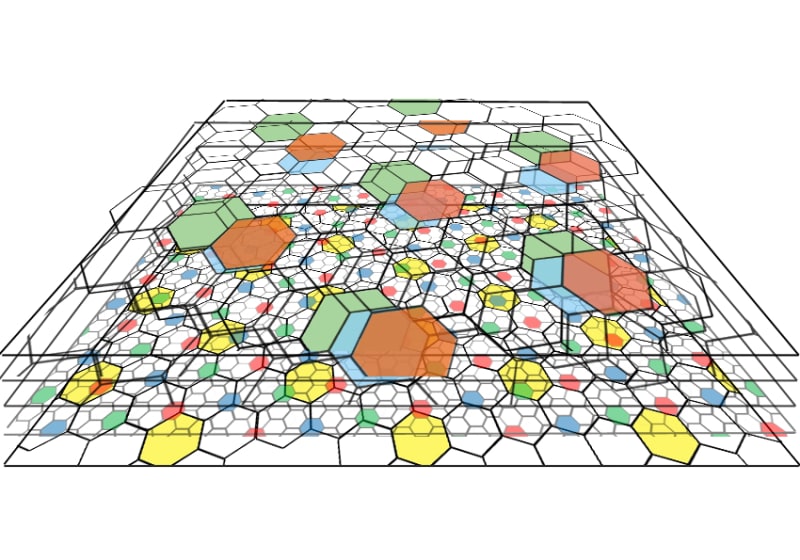

The assumption behind the EYEYE system is that understanding information transfer in a networked system is essential if that system is to develop an internal worldview, which in turn is essential for the creation of meaning. The goal of the EYEYE system is to create an autonomous deep learning system that can handle the complete set of possible states of changing 2D patterns: fast data inputs are classified and memorized in modules composed of cellular networks housed in a specialized architecture. Queries, recall, recognition, and prediction are all part of the system, whose essence lies in distributed memory, asynchronous independent cellular calculations, and internal bottom-up and top-down feedback. Taking vision as an example (the same approach applies to any other data-gathering device), the main problem is the variability and complexity of the inputs. The possible system states are so numerous that they lie far beyond any a priori memorization and classification, unless the input system parcels the input patterns into regions small enough to have a limited number of states, and allows each region to classify its state independently and recombine it asynchronously with the surrounding nodes.
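To make the combinatorial argument concrete, here is a minimal Python sketch. It assumes binary pixels and square, non-overlapping tiles; the function names and the 2×2 tile size are hypothetical and are not taken from the EYEYE code. A 32×32 binary image has 2^1024 possible states, whereas a single 2×2 tile has only 16, so each local unit only ever has to distinguish 16 patterns.

```python
from typing import List, Tuple

Grid = List[List[int]]  # 2D binary pattern: 0 = off, 1 = on


def split_into_tiles(grid: Grid, k: int) -> List[Tuple[int, ...]]:
    """Parcel a 2D pattern into non-overlapping k x k tiles, scanned row by row.

    Assumes the grid height and width are both multiples of k.
    """
    height, width = len(grid), len(grid[0])
    if height % k or width % k:
        raise ValueError("grid dimensions must be multiples of k")
    tiles = []
    for top in range(0, height, k):
        for left in range(0, width, k):
            tiles.append(tuple(grid[r][c]
                               for r in range(top, top + k)
                               for c in range(left, left + k)))
    return tiles


def tile_state(tile: Tuple[int, ...]) -> int:
    """Encode one binary tile as an integer state in [0, 2 ** len(tile))."""
    state = 0
    for bit in tile:
        state = (state << 1) | bit
    return state


def state_counts(height: int, width: int, k: int) -> Tuple[int, int]:
    """Return (states of the whole binary grid, states of a single k x k tile)."""
    return 2 ** (height * width), 2 ** (k * k)


if __name__ == "__main__":
    grid = [
        [1, 0, 0, 0],
        [0, 1, 0, 0],
        [0, 0, 1, 1],
        [0, 0, 1, 1],
    ]
    # Each tile is classified on its own, independently of its neighbours.
    print([tile_state(t) for t in split_into_tiles(grid, 2)])  # [9, 0, 0, 15]

    whole, per_tile = state_counts(32, 32, 2)
    print(len(str(whole)), per_tile)  # 309 16
```

The whole-image count is a 309-digit number, while each tile's lookup table stays at 16 entries regardless of image size; only the number of tiles grows.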

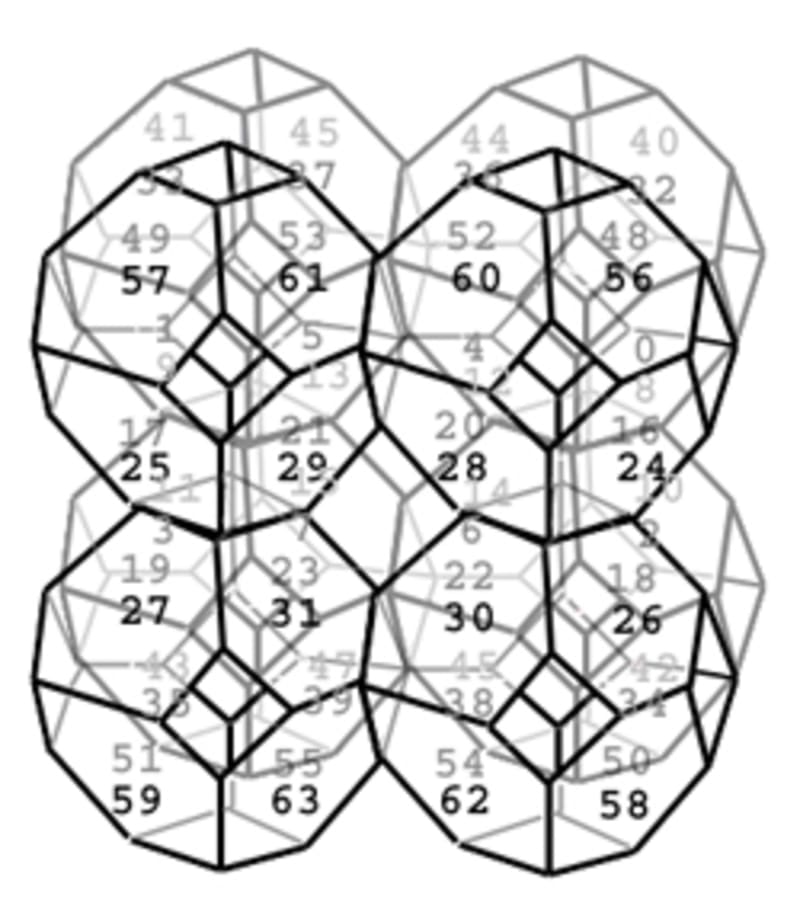

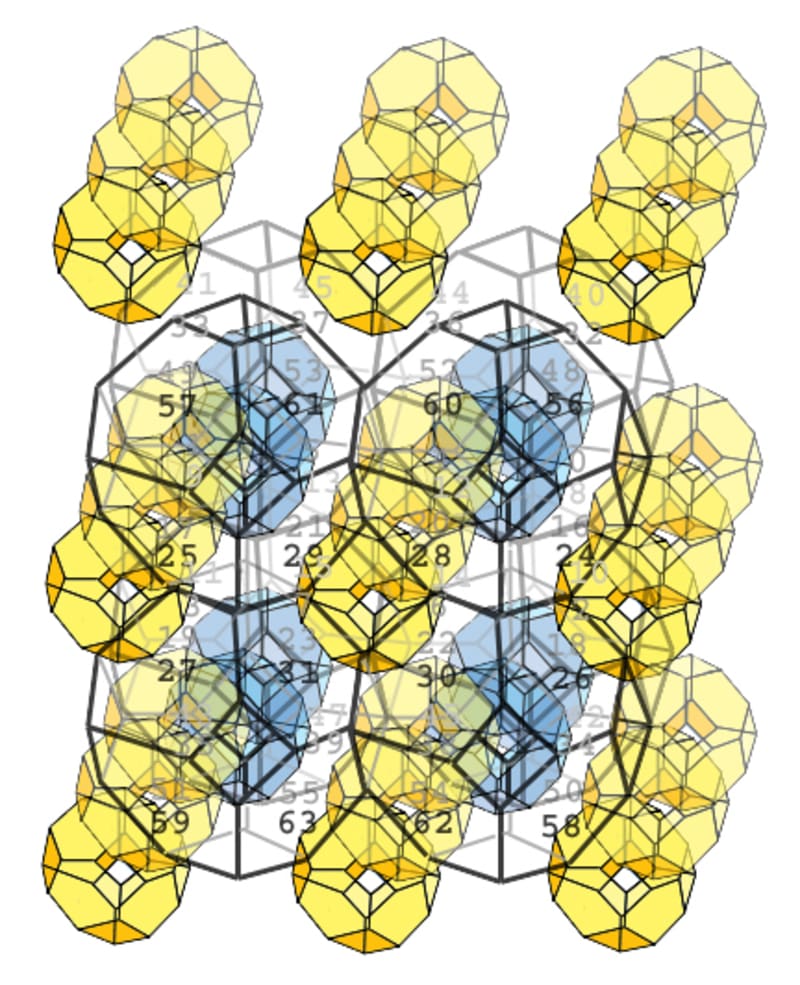

The state-space problem is resolved by dividing patterns into 64 local “visual primitives”, each able to handle its local state space and to share that information with surrounding local units as well as with higher and lower levels. In a phonetic language, each letter represents a unit sound, and letters can be strung together to form innumerable words. Likewise, with 64 basic patterns, each representing a “pictorial sound” in a given region of space, any 2D shape (a “pictorial word”) can be composed. The exact position of that word in 2D space does not need to be remembered, because the architecture already places it in a specific region. The Cambridge scrambled-text example shows that “reading” remains possible as long as all the sounds (letters) are present and the edge letters (first and last) are in place. In the EYEYE system, the “pictorial word” is memorized in a 3D symbolic space-network composed of hash units that contain similar “pictorial sounds”, so that variation does not affect recognition. Live data is obtained in parallel by many independent sensory cells. Each cell accepts a limited number of primitives that cover all the possibilities of its phase space, and uses simple software to analyze them and output the results to a higher-level sensory cell.
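The scrambled-text analogy can be expressed as a hashing rule. The following Python sketch is an assumption about how such an order-tolerant key might work, not the patented EYEYE hash units: a “word” is any sequence of symbols (letters here, or primitive IDs 0 to 63), its first and last symbols stay fixed, and the middle symbols are reduced to a sorted multiset, so scrambled variants land in the same bucket. `order_tolerant_key` and `HashMemory` are hypothetical names.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def order_tolerant_key(word: Sequence) -> Tuple:
    """Cambridge-style key: keep the first and last symbols in place and
    reduce the middle symbols to a sorted multiset, so scrambling the
    middle does not change the key."""
    if len(word) <= 2:
        return tuple(word)
    return (word[0], tuple(sorted(word[1:-1])), word[-1])


class HashMemory:
    """Hypothetical stand-in for a hash unit: words with equal keys share a bucket."""

    def __init__(self) -> None:
        self.buckets: Dict[Tuple, List[Tuple]] = defaultdict(list)

    def memorize(self, word: Sequence) -> None:
        self.buckets[order_tolerant_key(word)].append(tuple(word))

    def recall(self, word: Sequence) -> List[Tuple]:
        # .get() avoids creating empty buckets for unknown words.
        return list(self.buckets.get(order_tolerant_key(word), []))


if __name__ == "__main__":
    memory = HashMemory()
    memory.memorize("reading")
    print(memory.recall("rdaeing"))  # [('r', 'e', 'a', 'd', 'i', 'n', 'g')]
    print(memory.recall("gnidaer"))  # [] (edge letters moved)

    # The same rule works on sequences of primitive IDs (0 to 63).
    memory.memorize([3, 17, 42, 5])
    print(memory.recall([3, 42, 17, 5]))  # [(3, 17, 42, 5)]
```

Scrambling only the interior still retrieves the stored word, while moving an edge symbol does not, which mirrors the two conditions of the Cambridge example.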

Each module starts processing a 2D perceptual input pattern immediately, abstracts it in successive layers, and memorizes it as a network of active nodes. It then transmits those active networks to other modules as a 2D perceptual input pattern. Each module can recall, recognize, and predict memorized state patterns of its local environment in real time. The transmitted patterns can spread to various other modules, or can recombine with other transmitted patterns into a new 2D perceptual input pattern, creating opportunities for complex information sharing, recombination, and distillation of the needed output. (Based on two US patents.)
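The entry lists Python with an SQL memory as its software. Taking that as a hint, a module's memorize, recognize, and transmit cycle might look like the following sketch, which uses the standard-library `sqlite3` module. Everything else is an assumption for illustration: the `Module` class, the table layout, the comma-separated pattern key, and especially the `n // 2` abstraction step, which merely stands in for the successive abstraction layers and is not the patented method. Prediction is not modelled.

```python
import sqlite3
from typing import FrozenSet, Iterable, List


class Module:
    """Hypothetical EYEYE-style module with an SQL-backed memory of active-node patterns."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.db = sqlite3.connect(":memory:")  # in-memory SQL store for this module
        self.db.execute(
            "CREATE TABLE memory (pattern TEXT PRIMARY KEY, seen INTEGER NOT NULL)"
        )
        self.downstream: List["Module"] = []  # modules that receive this module's output

    @staticmethod
    def _encode(active_nodes: Iterable[int]) -> str:
        """Serialize a set of active node IDs into a canonical text key."""
        return ",".join(str(n) for n in sorted(set(active_nodes)))

    def memorize(self, active_nodes: Iterable[int]) -> None:
        """Store the pattern, or increment its count if it was seen before."""
        key = self._encode(active_nodes)
        self.db.execute("INSERT OR IGNORE INTO memory (pattern, seen) VALUES (?, 0)", (key,))
        self.db.execute("UPDATE memory SET seen = seen + 1 WHERE pattern = ?", (key,))

    def times_seen(self, active_nodes: Iterable[int]) -> int:
        """Recognition: how often this exact pattern has been memorized (0 = new)."""
        row = self.db.execute(
            "SELECT seen FROM memory WHERE pattern = ?", (self._encode(active_nodes),)
        ).fetchone()
        return row[0] if row else 0

    def process(self, active_nodes: Iterable[int]) -> FrozenSet[int]:
        """Abstract the input, memorize it, and transmit the result downstream.

        The abstraction step (merging node pairs via n // 2) is a placeholder for
        the successive layers described in the text. Assumes the module graph is acyclic.
        """
        abstracted = frozenset(n // 2 for n in active_nodes)
        self.memorize(abstracted)
        for module in self.downstream:
            module.process(abstracted)
        return abstracted


if __name__ == "__main__":
    v1, v2 = Module("v1"), Module("v2")
    v1.downstream.append(v2)
    print(sorted(v1.process({0, 1, 4, 5})))  # [0, 2]
    print(v1.times_seen({0, 2}), v2.times_seen({0, 1}))  # 1 1
```

Giving each module its own database keeps memory distributed, as the text describes; a module that receives patterns from several upstream modules would simply have more than one `downstream` link pointing at it.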

About the Entrant

- Name: Neven Dragojlovic

- Type of entry: individual

- Software used for this entry: Python code with SQL memory

- Patent status: patented